IEEE SMC 2009 Tutorials (in alphabetic order by presenter’s surname)

(1) Title: “Multimedia Security Systems”

Presenters: Sos Agaian and Dr. Philip Chen

Duration of Tutorial:

Full day

Abstract

The issue of multimedia data security is becoming increasingly vital as

civilization moves closer and closer toward the information age. Creation,

editing, distribution, and storage of digital multimedia data, such as

images, audio, video, and text, have become the major tasks of today’s

computerized systems (cell phones, PDAs, etc.) along with the continuous

availability of the Internet, and will continue to be a major driving force

for systems research and communications in the future. Current advances

in the digital multimedia processing community have introduced a wide range

of security aspects on the topics of confidential data transmission and

storage, user identification, and authentication.

This course will present

an overview of the theory and integrated applications of secure

communication and information systems. The three main objectives of this

course are: to gain knowledge of multimedia data system representations;

to ensure the security and integrity of vital multimedia data through

cryptographic and digital data hiding (steganographic,

watermarking) techniques; and to apply these concepts in real-time

applications.

(2) Title: “Brain-Machine Interfaces”

Presenters: Jose M. Carmena and Jose del R. Millan

Duration of Tutorial:

One-half day

Abstract

In this tutorial we will introduce the exciting new field of

brain-machine interfaces (BMI) and survey the main invasive and non-invasive

techniques employed and their applications.

BMI is a young interdisciplinary

field that has grown tremendously during the last decade. BMI is about

transforming thought into action, or conversely, sensation into perception.

This novel paradigm contends that a user can perceive sensory information

and enact voluntary motor actions through a direct interface between the

brain and a prosthetic device in virtually the same way that we see, hear,

walk or grab an object with our own natural limbs. Proficient control of the

prosthetic device relies on the volitional modulation of neural ensemble

activity, achieved through training with any combination of visual, tactile,

or auditory feedback. BMI has enormous potential as therapeutic technology

that will improve the quality of life for the neurologically impaired.

Research in BMIs has flourished in the last decade with impressive

demonstrations of nonhuman primates and humans controlling robots or cursors

in real time through single-unit, multiunit, and field potential signals

collected from the brain. These demonstrations can be divided largely into

two categories: either continuous control of end-point kinematics, or

discrete control of more abstract information such as intended targets,

intended actions, and the onset of movements. In the first part of the

tutorial, Dr. Carmena will cover cortical approaches to BMI with a focus on

bidirectional techniques for decoding motor output and encoding sensory

input. The techniques to be discussed include chronic microelectrode arrays in

animal subjects as well as electrocorticography (ECoG) in human subjects. In

the second part of the tutorial, Dr. Millan will cover non-invasive

approaches to BMI with a focus on brain-controlled robots and

neuroprosthetics. These approaches are based on electroencephalogram (EEG)

signals. As illustrated by some working prototypes such as a wheelchair, the

success of these approaches relies on the use of asynchronous protocols for

the analysis of EEG, machine learning techniques, and shared control for

blending the human user’s intelligence with the intelligent behavior of a

semi-autonomous robotic device.

(3) Title: “Challenges in Network Virtualization”

Presenter: Omar Cherkaoui

Duration of Tutorial:

One-half day

Abstract

This tutorial provides an overview of the discipline of Network

Virtualization (NV).

Previously, network virtualization consisted of deploying network

services (VLAN, VPN, etc.); today it has evolved into the deployment of multiple distinct

networks over the same physical infrastructure. Each network instance

requires a level of isolation from the other instances. This isolation relies on

established OS virtualization concepts such as hypervisors (VMMs) and

containers. Furthermore, those concepts use an independent layer for the

control and sharing of resources like network links, CPU, memory,

interfaces, etc. Virtualization has emerged as an active research area. Many

large research projects (GENI, 4ward, Federica, Clean Slate, Horizon, JGN2

Japan) have been launched during the last two years. Those initiatives

mainly try to develop the next generation network based on the network

virtualization concept. Network virtualization will require resolving many

research issues and challenges. We need to know where to push this

virtualization: on which network/equipment and at which layer (L3/L2/L1)? We

also need to determine the right trade‐off between isolation, performance

and flexibility of migration. This also means deciding where to apply

virtualization: at the data plane, control plane, or management plane.

Another question is whether virtualization needs to be established

at the hardware level, OS level, or service level. New infrastructure

virtualization architectures need to be developed. Resource allocation

algorithms will have to be adapted to the virtual network instances. This

virtualization also adds a new level of configuration complexity that

must be resolved. We will review how the main architectures proposed

by projects such as GENI, VINI, FIND, Clean Slate, Horizon, etc.

handle those virtual slices and instances. We will present different

migration strategies in order to offer resiliency and reliability in this

new virtualized environment.

(4) Title: “Simulation-Based Engineering of Complex Systems”

Presenter: John R. Clymer

Duration of Tutorial:

Full Day

Abstract

This tutorial presents

a new, proven approach to engineering complex adaptive systems,

consisting of a method, a graphical system description language, a

computer-aided design tool, and several illustrative examples. Study of a

large number of complex systems during the last 40 years by Dr. Clymer and

others, including computer, transportation, manufacturing, business, and

military systems, has shown that complex systems are best characterized as a

set of interacting, concurrent processes. This discovery inspired the

development of Context Sensitive Systems (CSS) theory, based on mathematical

linguistics and automata theory, as a way of thinking about complex systems

using interacting concurrent processes. Between 1968 and 1971, Dr.

Clymer developed a graphical modeling language, Operational Evaluation

Modeling (OpEM), to express CSS models of both existing and conceptual

systems. During the same time period, an alternative approach, Petri nets,

was developed independently of OpEM. Subsequently, after 20 years of using

procedure-oriented simulation programs to design and evaluate complex

systems, a graphical, object-oriented, discrete event simulation library,

OpEMCSS, was developed that works with ExtendSim (Imagine That Inc.) to

enable rapid development of CSS models and simulations in the OpEM language.

Since an OpEMCSS simulation is an abstract description of a complex system,

understanding how the simulation works assists the systems engineer in

understanding how the complex system works, allowing the system design to be

optimized to meet stakeholder requirements. In this tutorial, it is shown

that CSS theory, OpEM modeling language, and OpEMCSS library can be applied

to understand Complex Adaptive Systems (CAS) and to perform Model-Based

Systems Engineering.

Model-Based Systems Engineering (MBSE) mitigates system development problems

(resulting from “stove-piped systems” design methods) that are caused by the

failure to optimize the interoperability and synergisms among all component

algorithms and methods at the overall system level. Further, the

interactions of the system with its external systems and the dynamic demands

of the operational environment on the system must be included in an MBSE

system level model and evaluated for tradeoffs.

An OpEMCSS system level model provides the structure and ontology (top level

formalisms) needed to connect detailed component models for MBSE. The MBSE

approach presented in this tutorial is: (1) apply the OpEM top-down systems

design methodology, (2) perform system concept and top level design

tradeoffs to optimize stakeholder requirements using OpEMCSS, (3) produce a

systems design specification that includes component interface and

qualification system requirements using a design capture database tool, (4)

develop component detailed models of alternative component algorithms and

methods using the OpEMCSS special blocks, (5) perform virtual systems

integration and system Verification & Validation (V&V) using the system

level OpEMCSS simulation, and (6) determine impact of requirements changes

and conduct detailed design trades using the system level OpEMCSS

simulation.

The OpEMCSS graphical simulation library works with the popular commercial

software tool, ExtendSim (www.ImagineThatInc.com), which was chosen for two

major reasons. First of all, ExtendSim is relatively inexpensive for people

to buy and use. Further, the ExtendSim DEMO program is available free from

Imagine That Inc.

The OpEMCSS icon-blocks automatically provide more than 95% of all

simulation code that in the past had to be programmed by hand. In

context-sensitive systems, these programming details are very complex and

would otherwise require extensive programming skill and effort to

accomplish. ExtendSim, with the OpEMCSS library, gives systems practitioners

the ability to experiment with complex, context-sensitive interactions and

quickly build a model. Time is not wasted dealing with complex programming

details and writing extensive code, but rather the emphasis is on complex

systems design, analysis, and evaluation for MBSE.

ExtendSim + OpEMCSS can be used in any field that is concerned with entities

that perform a set of tasks that lead to satisfaction of a measurable goal

that may or may not be explicitly known or stated. Such fields include

project management, systems engineering, software engineering, industrial

engineering, business organizations, societal systems and sociology,

biological and ecological systems, economic systems, and others. Thus, this

tutorial is designed for a broad spectrum of people who wish to gain an

understanding of complex systems and MBSE. It will be shown that, although

complex systems have behaviors that are often difficult to understand, the

underlying ExtendSim + OpEMCSS model building blocks comprising a complex

system model are simple and easy to understand.

(5) Title: “Requirements Engineering for Complex Systems”

Presenter: Dr. Armin Eberlein

Duration of Tutorial:

Half-day

Abstract

This tutorial

addresses the early life cycle of system development and its effect on later

stages of the life cycle. Assuming that the primary measure of project

success is the extent to which the system meets the users’ needs, the

determination of these needs is critical. A flawlessly working system that

does not meet the users’ needs is considered a failed project. How much

requirements engineering is needed? Should we use traditional approaches or

agile approaches? This tutorial aims at demystifying the challenges related

to requirements. It shows the importance of getting just enough requirements

to have a sufficient understanding of the system in order to start its

development. The requirements engineering process will be introduced

together with the activities involved, such as requirements elicitation,

analysis, documentation, validation and management. The tutorial will focus

on techniques that can be used to improve each one of these stages. The

techniques include stakeholder identification and profiling, interviewing,

traceability techniques, reviews, requirements testing, requirements

management, requirements change, tools, prototyping, etc. Emphasis will also

be placed on how to handle nonfunctional quality requirements. The tutorial

points out situations in which the various requirements engineering

techniques are most applicable. Using all the techniques all the time and

going for more and more complex approaches is just as detrimental to project

success as ignoring requirements engineering completely. The aim is to find

the most efficient set of requirements engineering techniques for the

project at hand. With the help of an industrial case study, the tutorial

will show how a customized development process that contained just-enough

requirements engineering was used to maximize the benefits. The success of

this project is shown by comparing it with another, similar project that did

not use a proper requirements engineering process.

(6a) Title: “New Trends in Safety Engineering”

Presenter: Dr. Hossam A. Gabbar

Duration of Tutorial:

Half-Day

Abstract

Accidents still occur

in industrial systems, causing harm to people, facilities,

and the environment. Manufacturing and production organizations are seeking

better ways to ensure safety. This tutorial explores advanced methods and

best practices in safety engineering, including safety system design,

process safety management, safety life cycle, fault simulation, qualitative

and quantitative fault diagnosis techniques, risk assessment and management,

safety verification, safety knowledge management and decision support, and

computational methods for safety systems. Participants will have hands-on

practice using international standards such as IEC-61508, ISA-S84, ISA-S88,

ISA-S95, and other related safety standards that will be explained using

selected processes from oil & gas, energy systems, production and

manufacturing systems. Participants will acquire essential knowledge and

hands-on experience with risk calculation, treatment, and management, and their

application to the design and verification of recovery, startup/shutdown,

and disaster operating procedures. They will acquire knowledge about

accident/incident analysis and management using knowledge-based systems and

computational intelligence techniques.

(6b) Title: “Risk-Based Design & Evaluation of Green Hybrid Energy Supply Chains”

Presenter: Dr. Hossam A. Gabbar

Duration of Tutorial:

Half-Day

Abstract

All nations are

seeking cleaner and cheaper energy systems to cover their local and regional

energy needs using combined hybrid energy technologies. This tutorial will

enable energy practitioners and professionals to learn engineering design

methods and best practices to model and evaluate different energy supply

chain scenarios using all possible renewable energy sources, such as sun,

wind, nuclear, geothermal, water, and biomass. Participants will learn

modeling and simulation techniques, risk-based design, and process control

and operation synthesis of energy supply chains with consideration of

safety, health, and environmental management. Attendees will acquire

essential knowledge on qualitative and quantitative process optimization to

achieve best energy supply chain scenarios in view of local renewable

sources, energy demand, and conversion technologies. Case studies will be

analyzed to identify possible energy supply chain scenarios and their

implementation using smart grid controllers.

(7) Title: “Frequency-time discrete transforms and their applications in image processing”

Presenter: Artyom Grigoryan

Duration of Tutorial:

Half-Day

Abstract

The goal of this tutorial is to present the theory of splitting fast

2-D Fourier transforms in the time-frequency domain and their applications in

image processing, namely in multiresolution, image filtration, image

reconstruction from projections, image enhancement, and encryption. We

focus on a novel approach for efficient calculation of 2-D unitary

transforms, which relates to the construction of decomposition, namely the

universal transition to the short unitary transforms with minimum

computational complexity. The core of this course is the tensor

representation and its modification, the paired representation of the 2-D

images with respect to the Fourier transform.

The paired transformation

provides frequency and time representation of 2-D images, but it is not a

wavelet transform. The basic functions of the paired transforms are defined

by linear integrals (sums) along specific parallel directions in the spatial

domain. Therefore it is possible to decompose the image by its direction

images and define a multiresolution map which can be used for image

processing and enhancement. Many examples and MATLAB-based programs

illustrating the proposed concepts of the tensor and paired forms of image

representation and their implementations in image enhancement and image

reconstruction are demonstrated as well. We also introduce novel concepts of

the mixed and parameterized elliptic Fourier transforms which can be used in

signal and image processing, including image cryptography. This course

introduces the reader to new concepts and methods of Fourier analysis in

advanced digital image processing. It will help readers to use new forms of

representation and their effective applications in 2-D image processing, as

well as in multidimensional image processing in the frequency-and-time

domain.

(8a) Title: “Model a Discourse and Transform it to Your User Interface”

Presenter: Hermann Kaindl

Duration of Tutorial: Half-Day

Abstract

Every interactive system needs a user interface, today possibly even

several, adapted for different devices (PCs, PDAs, mobile phones).

Developing a user interface is difficult and takes a lot of effort, since it

normally requires design and implementation. This is also expensive, and

even more so for several user interfaces for different devices. This

tutorial shows how human-computer interaction can be based on discourse

modeling, even without employing speech or natural language. Our discourse

models are derived from results of Human Communication theories, Cognitive

Science and Sociology. Such discourse models can specify an interaction

design. This tutorial also demonstrates how such an interaction design can

be used for model-driven generation of user interfaces and linking them to

the application logic and the domain of discourse.

(8b) Title: “Combining Requirements and Interaction Design through Usage Scenarios”

Presenter: Hermann Kaindl

Duration of Tutorial:

Half Day

Abstract

When the requirements and the interaction design of a system are

separated, they will most likely not fit together, and the resulting system

will be less than optimal. Even if all the real needs are covered in the

requirements and also implemented, errors may be induced by human-computer

interaction through a bad interaction design and its resulting user

interface. Such a system may not even be used at all. Conversely, a great

user interface for a system with features that are not required will not be

very useful either. Therefore, we argue for combined requirements

engineering and interaction design, primarily based on usage scenarios.

However, scenario-based approaches vary especially with regard to their use,

e.g., employing abstract use cases or integrating scenarios with functions

and goals in a systematic design process. So, the key issue to be addressed

is how to combine different approaches, e.g., in scenario-based development,

so that the interaction design as well as the development of the user

interface and of the software internally result in an overall useful and

usable system. In particular, scenarios are very helpful for purposes of

usability as well.

(9) Title: “Soft Computing For Biometrics Applications: A Comprehensive Overview”

Presenter: Dr. Marian S. Stachowicz

Duration of Tutorial:

Half-day

Abstract

The goal of soft computing is to create systems that can handle

imprecision, uncertainty, and partial information while preserving

robustness, tractability, and low solution cost. The ideal model for such a

system is the human brain. Three of the principal components of soft

computing are fuzzy systems, neural computing, and genetic algorithms.

Different combinations of these components can be used in a complementary

fashion to produce powerful systems for modeling, diagnosis, and control.

Various biometric technologies are available for identifying or verifying an

individual by measuring fingerprint, hand geometry, face, signature, voice,

or a combination of these traits. Because a biometric feature cannot be

captured in precisely the same way twice, biometric matching is never exact.

The matching is always a fuzzy comparison. This characteristic makes soft

computing an ideal approach for solving different biometric problems. This

user-friendly tutorial is a valuable resource to introduce professionals

from many disciplines to the broad applicability of Soft Computing to

several areas of human affairs.
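
The point that biometric matching is never exact can be illustrated with a minimal sketch. It assumes each captured trait has already been reduced to a numeric feature vector; the vectors, the cosine-similarity score, and the 0.95 threshold below are hypothetical values chosen for illustration, not taken from the tutorial. A soft computing system would typically replace the hard threshold with fuzzy membership functions or a trained classifier.

```python
import math

def match_score(template, sample):
    """Cosine similarity between two feature vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(template, sample))
    norm = (math.sqrt(sum(a * a for a in template))
            * math.sqrt(sum(b * b for b in sample)))
    return dot / norm if norm else 0.0

def verify(template, sample, threshold=0.95):
    """Accept the sample when it is close enough to the enrolled template.

    The threshold is an assumed tuning parameter: raising it rejects more
    impostors but also more genuine users.
    """
    return match_score(template, sample) >= threshold

# Hypothetical feature vectors: two captures of the same trait never agree
# exactly, so an equality test would always fail.
enrolled = [0.80, 0.10, 0.55, 0.30]
same_user = [0.78, 0.12, 0.57, 0.29]
other_user = [0.10, 0.90, 0.20, 0.60]

print(enrolled == same_user)          # exact comparison fails
print(verify(enrolled, same_user))    # tolerant comparison accepts
print(verify(enrolled, other_user))   # and still rejects a different user
```

The design choice worth noting is that the decision depends on a degree of similarity rather than on identity, which is what makes the problem a natural fit for fuzzy and other soft computing methods.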

(10) Title: “New Global Optimization Techniques Based on Computational Intelligence”

Presenter: Dr. Mark Wachowiak

Duration of Tutorial: Half-day

Abstract

Global optimization techniques from computational intelligence, such as

particle swarm and ant colony methods, have become increasingly important in

systems science and engineering, and in human-machine systems. For example,

complex systems are often modeled as large systems of equations, in which

model parameters are determined to correspond with experimental data. Global

optimization is used to find these parameters. Furthermore, many important

problems from systems science rely on simulation-based or multi-objective

optimization, wherein the cost function itself is formed from the results of

large simulation experiments. Although derivative-based methods are

generally agreed to be preferred strategies, in simulation-based

optimization, closed-form derivatives of the objective function are

generally not available, and are not easily computed. As a result, new

optimization paradigms must be considered to solve these problems. The

purpose of this tutorial is to introduce the motivations for these new

optimization strategies – in particular, swarm intelligence, quantum

particle swarm, ant colony optimization, new concepts in evolutionary

computation, and hybrid deterministic-stochastic paradigms – their

fundamental theoretical principles, implementation issues, and recent novel

applications. The adaptation of these innovative techniques to new multi-

and many-core computers, which are becoming increasingly popular and

inexpensive, will also be addressed. Visualization-guided and interactive

optimization will also be demonstrated.
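
As a rough illustration of derivative-free optimization of the kind described above, the following is a minimal sketch of a basic global-best particle swarm. It is a textbook variant, not the presenter's method; the function names, the parameter values (inertia 0.7, acceleration coefficients 1.5), and the sphere test function are assumptions made for the example. The cost function is treated as a black box, which is the situation in simulation-based optimization where closed-form derivatives are unavailable.

```python
import random

def particle_swarm_minimize(cost, bounds, n_particles=30, n_iters=200,
                            inertia=0.7, c_personal=1.5, c_global=1.5, seed=0):
    """Minimize `cost` over a box using a basic global-best particle swarm."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial positions inside the box; velocities start at zero.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]           # each particle's best position
    best_val = [cost(p) for p in pos]
    g = min(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]  # best position of the swarm
    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Pull toward the particle's own best and the swarm's best.
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (best_pos[i][d] - pos[i][d])
                             + c_global * r2 * (g_pos[d] - pos[i][d]))
                lo, hi = bounds[d]
                # Clamp the new position to the search box.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = cost(pos[i])               # only function values are used
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val

def sphere(x):
    """Simple test function with its minimum of 0 at the origin."""
    return sum(v * v for v in x)

best_x, best_f = particle_swarm_minimize(sphere, [(-5.0, 5.0)] * 2)
print(best_x, best_f)
```

In practice `sphere` would be replaced by a call into the simulation whose output defines the cost, and the particles within one iteration can be evaluated independently, which is what makes such methods amenable to multi- and many-core hardware.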

(11) Title: “Intelligent Pattern Recognition and Applications on Biometrics in Interactive Learning Environment”

Presenter: Dr. Patrick Wang

Duration of Tutorial:

Half-day

Abstract

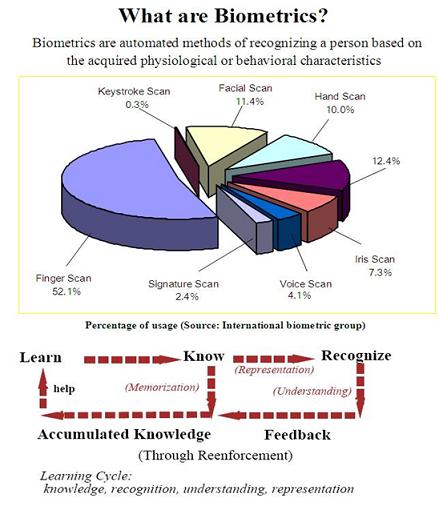

This talk deals

with some fundamental aspects of biometrics and its applications. It

covers the following topics: an overview of biometric technology and

applications; the importance of security, with a terrorist attack scenario; what

biometric technologies are; analysis versus synthesis in biometrics; analysis

as an interactive pattern recognition concept; the importance of measurement and

ambiguity; how it works (fingerprint extraction and matching, iris, and

facial analysis); authentication applications; thermal imaging for emotion

recognition; synthesis in biometrics; modeling and simulation; and further

examples and applications of biomedical imaging in an interactive fuzzy

learning environment. Finally, some future research directions are

discussed.

(12) Title: “Role-Based Collaboration”

Presenter: Dr. Haibin Zhu

Duration of Tutorial:

Half-day

Abstract

This tutorial aims to promote the research and application of

role-based collaboration (RBC) and to attract the interest of more researchers and

practitioners. RBC is a computational thinking methodology. It is an emerging

technology that mainly uses roles as underlying mechanisms to facilitate

abstraction, classification, separation of concerns, dynamics, and

interactions. It will find wide application in different fields, such as

organizations, management systems, systems engineering, and industrial

engineering. It is generally relevant to many research and engineering

fields, including software engineering, computer security, collaborative

intelligent systems, and social psychology (Fig. 1). The goal of special RBC

is to improve computer-based collaboration among people. CSCW

(Computer-Supported Collaborative Work) systems are computer-based tools

that support collaborative activities and should meet the requirements of

normal collaboration. They should not only support a virtual face-to-face

collaborative environment but also improve face-to-face collaboration by

providing more mechanisms to overcome the drawbacks of face-to-face

collaboration. The extended goal of general RBC is to improve collaborations

among objects including humans, systems, and system components. Roles can be

used to improve the collaboration methodologies, upgrade the management

efficiencies, keep the consistencies of systems, and regulate the behaviors

of system components and systems.

In this tutorial, we hope to clarify the terminology of role-based

collaboration and answer the following questions: What do we mean by roles

in collaboration? What is role-based collaboration (RBC)? Why do we need

RBC? How can we support RBC? What are the existing and potential applications

of RBC? What are the existing and potential benefits? What are the challenges

and difficulties?

First, the current state of CSCW research and the applications of role

concepts are reviewed, and the reasons for proposing role-based collaboration

are explained. Then, a synthetic view of roles in collaboration is proposed.

Based on the role concept, the general process of role-based collaboration

is demonstrated. To support role-based collaboration efficiently, the

architecture of role-based collaborative systems and the system model

E-CARGO are described. To demonstrate the application of RBC, two case

studies of RBC applications are presented: role-based multi-agent systems

and role-based software development. To show that RBC is significant and

practical, the role transfer problem is explained, an initial solution is

presented, and a copyrighted software tool is demonstrated. Finally, potential

applications of RBC and challenges that need further research are outlined.

(13) Title: “Neurodynamic Optimization: Mathematical Models and Selected Applications”

Presenter: Dr. Jun Wang

Duration of Tutorial:

Half-day

Abstract

Optimization

problems arise in a wide variety of scientific and engineering applications.

It is computationally challenging when optimization procedures have to be

performed in real time to optimize the performance of dynamical systems. For

such applications, classical optimization techniques may not be adequate

due to the problem dimensionality and stringent requirements on computation

time. One very promising approach to dynamic optimization is to apply

artificial neural networks. Because of the inherent nature of parallel and

distributed information processing in neural networks, the convergence rate

of the solution process does not decrease as the size of the problem

increases. Neural networks can be implemented physically in designated

hardware such as ASICs where optimization is carried out in a truly parallel

and distributed manner. This feature is particularly desirable for dynamic

optimization in decentralized decision-making situations. In this talk, we

will present a historical review and the state of the art of neurodynamic

optimization models and selected applications. Specifically, starting from

the motivation of neurodynamic optimization, we will review various

recurrent neural network models for optimization. Theoretical results about

the stability and optimality of the neurodynamic optimization models will be

given along with illustrative examples and simulation results. It will be

shown that many computational problems can be readily solved by using the

neurodynamic optimization models, including k winner-take-all, linear

assignment, shortest-path routing, data sorting, robot motion planning,

robotic grasping force optimization, model predictive control, etc.