Evan Dong

Introduction

Foreground object detection is an essential task in many image processing and image understanding algorithms, in particular for video surveillance. Background subtraction is a commonly used approach to segment out foreground objects from their background. In real world applications, temporal and spatial changes in pixel values such as due to shadows, gradual/sudden changes in illumination, etc. make modeling backgrounds a quite difficult task.

In our work, we propose an adaptive learning algorithm of multiple subspaces (ALPCA) to handle sudden/gradual illumination variations for background subtraction.

Datasets

We tested our proposed ALPCA using six different datasets: four benchmark datasets and two datasets that we captured in our ViGIR Lab. The reason for our own datasets is because none of the benchmark datasets available had changes in illumination that were drastic and/or sudden enough to test our algorithm. Here is a short description of each dataset used.

The "Dance" contains more than one thousand frames of a graphically generated indoor scene with two dancing characters. While this sequence contains a somewhat sudden change of illumination, these changes are very subtle, besides this is a synthetic image sequence. This is part of the VSSN 2006 dataset.

Sequence 1: "Dance"

The "Campus" video sequence is part of the PETS 2001 dataset. We used almost four thousand frames, including a variety of gradual illumination change (over the period of a day) and people walking at a reasonably far distance from the camera.

Sequence 2: "Campus"

The "Lobby" is an outdoor video sequence with almost five hundred frames. It is part of the PETS 2004 dataset and it also contains people meeting/chatting at the lobby of the INRIA Lab, in France.

Sequence 3: "Lobby"

The "Subway" is the last of the benchmark dataset used in our tests, and it contains a mix of natural and artificial illumination sources. It is part of PETS 2006 dataset and we used more than fourteen hundred of its frames. It was shot at a subway station and it captured people coming in and out of the station.

Sequence 4: "Subway"

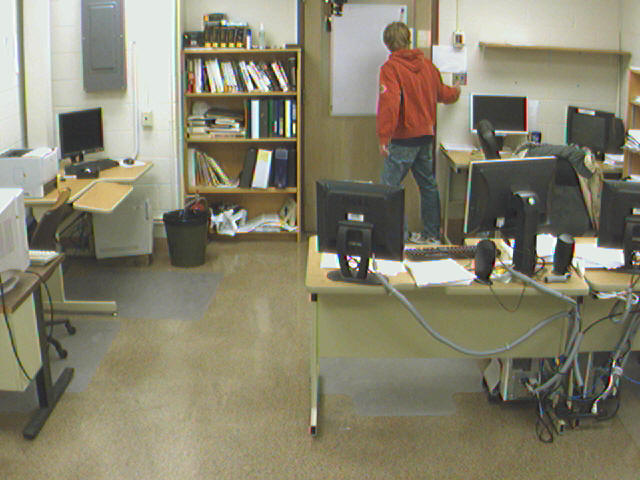

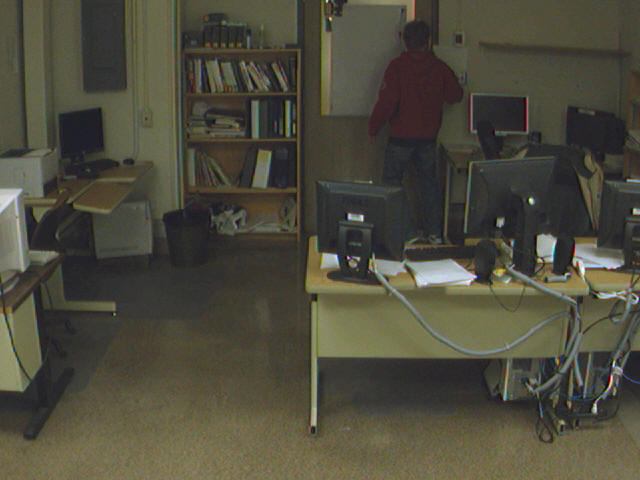

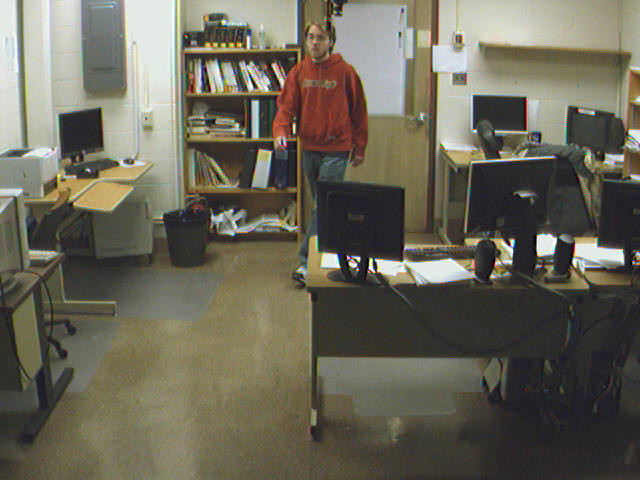

The "Sudden-Change" is the first of our self-created video sequences. It was captured with the purpose of testing our algorithm for drastic and sudden changes in illumination. During this five-hundred-frame video sequence, half of the light fixtures are switched on-and-off separately, creating three different combinations of lighting conditions. A person moves back and forth in front of the camera.

Sequence 5: "Sudden-Change"

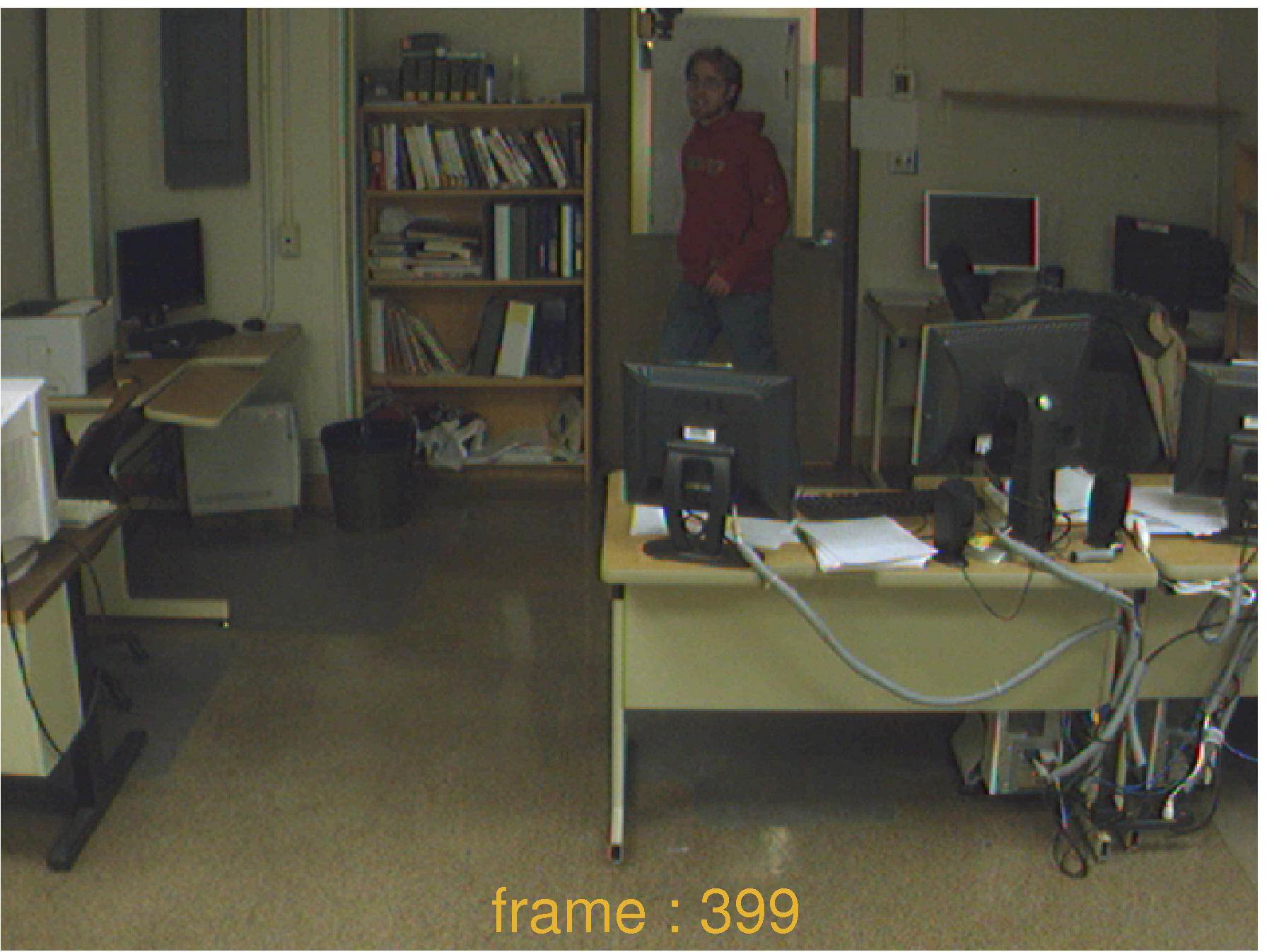

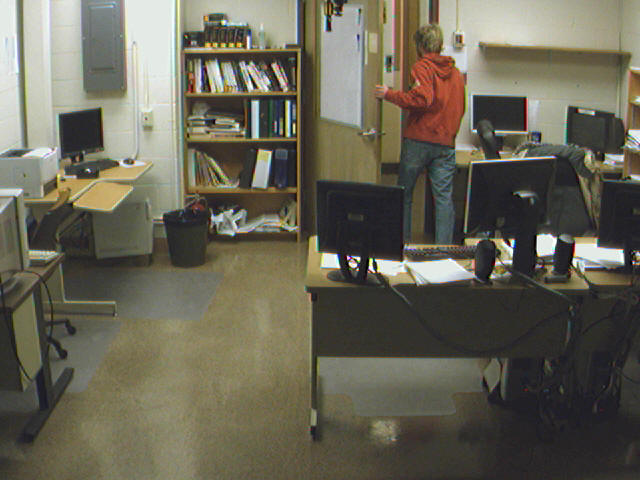

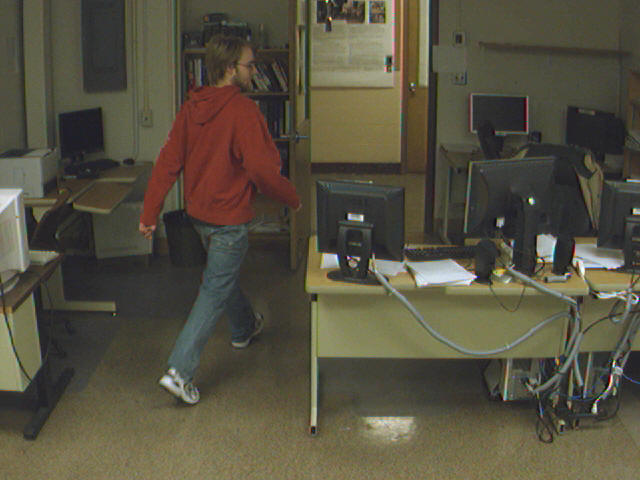

The "Sudden-Change-Door" is a sequence similar to the one above, with the addition of a sudden background change. That is, while some of the lights are switched off, a door in the back of the room is opened and closed. The light from the hallway floods the room, creating yet another set of combinations of lighting conditions.

Sequence 6: "Door"

Results

1. "Dance" (ALPCA) and "Dance" (APCA)

2. "Campus" (ALPCA)

3. "Lobby" (ALPCA)

4. "Subway" (ALPCA)

5. "Sudden-Change" (ALPCA) and "Sudden-Change" (APCA)

6. "Door" (ALPCA)

Reference

Y. Dong and G.N. DeSouza. Adaptive Learning of Multi-Subspace for Foreground Detection under Illumination Changes.

Submitted to Computer Vision and Image Understanding. (2009)

Y. Dong, T. X. Han and G.N. DeSouza. Illumination Invariant Foreground Detection using Multi-Subspace Learning.

Submitted to Machine Vision and Applications. (2009)