Computing With Words—A Paradigm Shift

There are many misconceptions about what Computing

with Words (CW) is and what it has to offer. A common misconception

is that CW is closely related to natural language processing.

In reality, this is not the case. More importantly, at this

juncture what is widely unrecognized is that moving from computation

with numbers to computation with words has the potential for

evolving into a basic paradigm shift—a paradigm shift which

would open the door to a wide-ranging enlargement of the role

of natural languages in scientific theories.

In essence, CW is a system of computation which adds

to traditional systems of computation two important capabilities:

(a) the capability to precisiate the meaning of words and propositions

drawn from natural language; and (b) the capability to reason

and compute with precisiated words and propositions.

As a system of computation, a CW-based model, or

simply CW-model, has three principal components. (a) A question,

Q, of the form: What is the value of a variable, Y? (b) An information

set, I = (p1, ..., pn), where the pi, i = 1, ..., n, are propositions

which individually or collectively are carriers of information

about the value of Y, that is, are question-relevant. One or

more of the pi may be drawn from world knowledge. A proposition,

pi, plays the role of an assignment statement which assigns

a value, vi, to a variable, Xi, in pi. Equivalently, pi may

be viewed as an answer to the question: What is the value of

Xi? Xi and vi may be explicit or implicit. A proposition, pi,

may be unconditional or conditional, expressed as an if-then

rule. Basically, an assignment statement constrains the values

which Xi is allowed to take. To place this in evidence, Xi and

vi are referred to as the constrained variable and the constraining

relation, respectively. More concretely, what this implies is

that the meaning of a proposition, p, may be represented as

a generalized constraint, X isr R, in which X is the constrained

variable, R is the constraining relation and r defines the modality

of the constraint, that is, the way in which R constrains X.

When vi is a word or a combination of words, Xi is referred

to as a linguistic variable, with vi being its linguistic value.

When it is helpful to stress that pi assigns a value to a variable,

pi is referred to as a valuation. Correspondingly, the information

set, I, is referred to as a valuation system, V.

The third component is an aggregation function, f,

which relates Y to the Xi:

Y=f(X1, ..., Xn)
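
As a rough illustration of the components described above, the following Python sketch represents one linguistic valuation as a generalized constraint, X isr R. It is a minimal sketch under stated assumptions, not part of the original formulation: the class name GeneralizedConstraint, the helper trapezoid, the example proposition "Robert is young" and the breakpoints chosen for "young" are all hypothetical, and only the possibilistic modality (r left blank, written X is R) is covered.

```python
from dataclasses import dataclass
from typing import Callable


def trapezoid(a: float, b: float, c: float, d: float) -> Callable[[float], float]:
    """Membership function with support [a, d] and core [b, c] (a <= b <= c <= d)."""
    def mu(x: float) -> float:
        if b <= x <= c:           # inside the core: full membership
            return 1.0
        if x <= a or x >= d:      # outside the support: no membership
            return 0.0
        if x < b:                 # rising edge between a and b
            return (x - a) / (b - a)
        return (d - x) / (d - c)  # falling edge between c and d
    return mu


@dataclass(frozen=True)
class GeneralizedConstraint:
    """Hypothetical container for 'X isr R' (names are illustrative)."""
    variable: str                       # X, the constrained variable
    relation: Callable[[float], float]  # R, here a fuzzy set given by its membership function
    modality: str = ""                  # r; blank is taken here to mean possibilistic

    def degree(self, value: float) -> float:
        """Degree to which a candidate value of X satisfies the constraint."""
        return self.relation(value)


# Assumed precisiation of the word "young"; the breakpoints are illustrative only.
young = trapezoid(0.0, 0.0, 25.0, 45.0)

# The proposition "Robert is young" read as the valuation Age(Robert) is young.
p = GeneralizedConstraint(variable="Age(Robert)", relation=young)

for age in (20, 30, 40, 50):
    print(age, p.degree(age))
```

In this reading the proposition acts as an assignment statement that does not fix Age(Robert) to a single number; it grades every candidate value by how well it fits the word "young".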

The principal difference between CW and conventional

systems of computation is that CW allows inclusion in the information

set, I, of propositions expressed in a natural language, that

is, linguistic valuations. Legalization of linguistic valuations

has important implications. First, it greatly enhances the capability

of computational methodologies to deal with imperfect information,

that is, information which in one or more respects is imprecise,

uncertain, incomplete, unreliable, vague or partially true.

In realistic settings, such information is the norm rather than

the exception. Second, in cases in which there is a tolerance for

imprecision, linguistic valuations serve to exploit the tolerance

for imprecision through the use of words in place of numbers.

And third, linguistic valuations are close to human reasoning

and thus facilitate the design of systems which have a high

level of machine intelligence, that is, a high level of MIQ (machine

IQ).

What does Computing with Words have to offer? The

answer rests on two important tools which are provided by the

machinery of fuzzy logic. The first tool is a formalism for

mm-precisiation of propositions expressed in a natural language

through representation of the meaning of a proposition as a

generalized constraint of the form X isr R, where as noted earlier

X is the constrained variable, R is the constraining relation

and r is the modality of the constraint (Zadeh 1986).

The second tool is a formalism for computing with

mm-precisiated propositions through propagation and counterpropagation

of generalized constraints. The principal rule governing constraint

propagation is the Extension Principle (Zadeh 1965, 1975).

In combination, these two tools provide an effective

formalism for computation with information described in a natural

language. And it is these tools that serve as a basis for legalization

of linguistic valuations.
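
To make constraint propagation more concrete, the sketch below applies the Extension Principle in its basic sup-min form to discrete fuzzy sets: the membership grade of an output value y is the largest, over all input combinations that f maps to y, of the smallest input grade. This is a simplified illustration, not the full machinery of generalized constraints; the function name extend and the fuzzy numbers about_2 and about_3 are assumptions made here for the example.

```python
from itertools import product
from typing import Callable, Dict

FuzzySet = Dict[float, float]  # maps an element to its membership grade in [0, 1]


def extend(f: Callable[..., float], *fuzzy_sets: FuzzySet) -> FuzzySet:
    """Propagate fuzzy constraints on X1..Xn through Y = f(X1, ..., Xn)."""
    result: FuzzySet = {}
    # Enumerate every combination of (element, grade) pairs, one pair per input set.
    for combo in product(*(fs.items() for fs in fuzzy_sets)):
        values = [x for x, _ in combo]
        grade = min(m for _, m in combo)            # joint grade of this combination
        y = f(*values)
        result[y] = max(result.get(y, 0.0), grade)  # sup over combinations giving y
    return result


# Hypothetical linguistic values "about 2" and "about 3" on a small integer universe.
about_2 = {1: 0.5, 2: 1.0, 3: 0.5}
about_3 = {2: 0.5, 3: 1.0, 4: 0.5}

# Y = X1 + X2, with X1 constrained by "about 2" and X2 by "about 3".
total = extend(lambda a, b: a + b, about_2, about_3)
print(total)
```

The result is itself a fuzzy set that peaks at 5, which matches the intuitive reading "about 2 plus about 3 is about 5". Continuous universes would need a different representation, for example computation on level sets, which is not shown here.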

What is important to note is that the machinery of fuzzy if-then

rules—a machinery which is employed in almost all applications

of fuzzy logic—is a part of the conceptual structure of CW.
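
For readers unfamiliar with fuzzy if-then rules, the following sketch shows a two-rule system of the kind found in simple control applications. It is one common simplification (crisp consequents combined by a weighted average) and is not claimed to be the formulation intended in the text; the variable names, membership functions and numerical values are all assumptions made for illustration.

```python
def cold(t: float) -> float:
    """Assumed membership of temperature t (in degrees C) in 'cold'."""
    return max(0.0, min(1.0, (20.0 - t) / 10.0))


def warm(t: float) -> float:
    """Assumed membership of temperature t (in degrees C) in 'warm'."""
    return max(0.0, min(1.0, (t - 10.0) / 10.0))


# Each rule reads: if temperature is <antecedent> then heater power is <consequent>.
RULES = [
    (cold, 80.0),  # if temperature is cold then heater power is 80 percent
    (warm, 20.0),  # if temperature is warm then heater power is 20 percent
]


def infer(t: float) -> float:
    """Blend the rule consequents, weighting each by how strongly its antecedent holds."""
    weights = [antecedent(t) for antecedent, _ in RULES]
    weighted = sum(w * power for w, (_, power) in zip(weights, RULES))
    # With the two membership functions above, the weights always sum to at least 1.
    return weighted / sum(weights)


for t in (10.0, 15.0, 20.0):
    print(t, infer(t))
```

Each rule is a conditional valuation: its antecedent constrains the temperature with a word rather than a number, and the output changes gradually as the degree of match changes.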

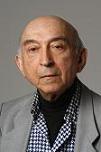

Professor Lotfi A. Zadeh's biographical information:

LOTFI A. ZADEH is a Professor in the Graduate School, Computer Science Division, Department of EECS, University of California, Berkeley. In addition, he is serving as the Director of BISC (Berkeley Initiative in Soft Computing). Lotfi Zadeh is an alumnus of the University of Tehran, MIT and Columbia University. He held visiting appointments at the Institute for Advanced Study, Princeton, NJ; MIT, Cambridge, MA; IBM Research Laboratory, San Jose, CA; AI Center, SRI International, Menlo Park, CA; and the Center for the Study of Language and Information, Stanford University.

His earlier work was concerned in the main with systems analysis, decision analysis and information systems. His current research is focused on fuzzy logic, computing with words and soft computing, which is a coalition of fuzzy logic, neurocomputing, evolutionary computing, probabilistic computing and parts of machine learning.

Lotfi Zadeh is a Fellow of the IEEE, AAAS, ACM, AAAI, and IFSA. He is a member of the National Academy of Engineering and a Foreign Member of the Russian Academy of Natural Sciences, the Finnish Academy of Sciences, the Polish Academy of Sciences, the Korean Academy of Science & Technology and the Bulgarian Academy of Sciences. He is a recipient of the IEEE Education Medal, the IEEE Richard W. Hamming Medal, the IEEE Medal of Honor, the ASME Rufus Oldenburger Medal, the B. Bolzano Medal of the Czech Academy of Sciences, the Kampe de Feriet Medal, the AACC Richard E. Bellman Control Heritage Award, the Grigore Moisil Prize, the Honda Prize, the Okawa Prize, the AIM Information Science Award, the IEEE-SMC J. P. Wohl Career Achievement Award, the SOFT Scientific Contribution Memorial Award of the Japan Society for Fuzzy Theory, the IEEE Millennium Medal, the ACM 2001 Allen Newell Award, the Norbert Wiener Award of the IEEE Systems, Man and Cybernetics Society, Civitate Honoris Causa by Budapest Tech (BT) Polytechnical Institution, Budapest, Hungary, the V. Kaufmann Prize, International Association for Fuzzy-Set Management and Economy (SIGEF), the Nicolaus Copernicus Medal of the Polish Academy of Sciences, the J. Keith Brimacombe IPMM Award, the Silicon Valley Engineering Hall of Fame, the Heinz Nixdorf MuseumsForum Wall of Fame, other awards and twenty-six honorary doctorates.

He has published extensively on a wide variety of subjects relating to the conception, design and analysis of information/intelligent systems, and is serving on the editorial boards of over sixty journals.